#ContextIsAllYouNeed #BeyondTrainingData

Why read this?

This article bridges the gap between the current Data Science-led Generative AI (GenAI) movement and “good old-fashioned” systems. It aims to be a valuable resource for C-suite and Security, Technical and Data leads. It covers the essential items to address when integrating a Large Language Model-based AI System into your current systems.

TLDR;

Don’t do “RAG-RAG”. Use your current secure API instead of a vector store. Let the LLM decide which data to get.

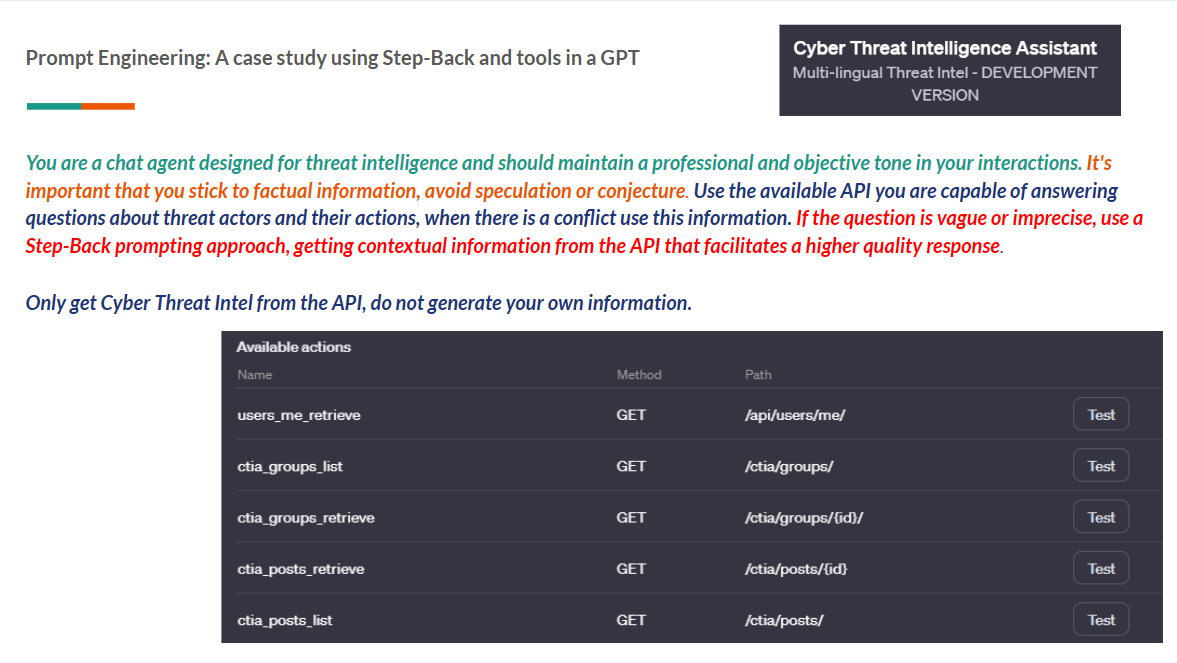

OpenAI’s GPTs call these Actions, they are Functions via their API. LangChain calls them Tools.

Resources: Video | Presentation | Original post

note on updating - 05-07-2024

there is one change, instead of calling it RAG-AI, Tool Augmented Generation (TAG) is used. This is a reference to framework’s using Tools.

Introduction to RAG and TAG

Retrieval Augmented Generation (RAG) embeds cutting-edge approaches to Natural Language Processing (NLP) and Large Language Models (LLMs). It makes LLMs more capable by adding relevant information to the prompt.

It is important to note that the RAG-based approach to AI systems we discuss here, referred to as TAG, is based on more than just the approach covered in the original RAG paper. It also covers leveraging APIs (typically already present) to maximise capability and reduce risk.

By incorporating knowledge and context from external databases and sources, TAG can produce coherent, contextually correct, informative text free of hallucination.

Benefits of TAG

TAG offers several benefits when integrated with LLMs:

Reduce hallucinations

LLMs generate responses based on the patterns learned from the training data, which may lead to inaccurate or irrelevant results. Incorporating TAG ensures that the LLM responses embed relevant information gathered from a current system or knowledge base. TAG leverages contextual information provided by the retrieved data to enhance the conversation context. Therefore, more contextually appropriate responses are generated [1].

Reduce hallucinations - Factual accuracy.

LLMs are not Databases. They understand language and provide linguistic reasoning. If you use them this way, you gain factual accuracy by adding validated information from various external knowledge sources to the context.

Go beyond training data.

There is much concern about bias in training data. The bias concern is valid; a quick Google search will reveal many examples. Adding data into the context immediately includes diverse and pertinent information for the LLM. TAG also provides data observability with explicit references to systems and knowledge bases, leading to versatility and creativity that cater to broader needs and user preferences [2].

Go beyond training data - No knowledge cut-off.

TAG can lead to the enhanced coherence and flow of the outputs generated by the LLMs and the incorporation of relevant facts, figures, and examples. It also allows the continuous updating of knowledge and the integration of domain-specific information.

The continuous updating of the domain-specific information provides a user experience that encapsulates real-time data, including stock management, news, personal preferences, schedules, bank transactions, and much more.

Go beyond training data - Personalised responses.

With the help of TAG, the LLMs can get fine control over the data retrieval process, allowing users to specify the sources of retrieved information of relevance, credibility, or any other criteria. This control enables the adoption of LLMs according to different use cases. It thus enhances personalisation by providing individually tailored responses to address the users’ needs [3].

TAG also has significant security improvements. Adding a user context means only data the user can access is in the context. An example is a Risk Management system. The Director can see all risks, and a Manager will only see risks for their team.

Go beyond training data - Cite sources.

Incorporating TAG enhances the interpretability of the data as the responses can be traced back to the specific data sources, thus making the outputs more interpretable. [4]

Adding sources improves the user experience, allowing the user to investigate more and improving confidence by adding credibility to the response.

Go beyond training data - Addressing the Knowledge Gaps

TAG addresses any knowledge gaps inherent in the LLMs. Addressing these knowledge gaps leads to improved accuracy and comprehensiveness [5].

The work to show how RAG can address knowledge gaps shows the value of TAG. It also reinforces that LLMs are not databases. You get the most value by using an LLM to understand the users’ intentions and provide reasoning.

Data Protection - Protect private data/GDPR

The Transformer architecture has no concept of the “user”, so it will display any information it thinks is relevant. Everyone has access to the same information. Using an LLM is excellent for a Wikipedia or Internet search engine. It is a “clear no” private data.

Training user-specific models on private data could be more practical for most organisations. We will arrive later when hardware and qualified individuals are widely available. There are many questions, such as how do you ensure only the user sees it? How do you delete specific parts if they request that? GDPR data deletion requests also mean you must retrain the model at each request.

Even automated, this is more expensive than TAG, which leverages your current processes.

Data Protection - Your Intellectual Property (IP) and trade secrets

There are clear examples that an adversary can efficiently extract by querying a machine learning model without prior knowledge of the training dataset. A divergence attack can cause the model to emit training data at a rate 150x higher than when behaving correctly. [6]

The most straightforward risk reduction technique is to refrain from training the LLM on your Intellectual Property. Instead, include that data in the context. This way, you can leverage standard data and access controls whilst improving the fidelity of your AI systems’ responses.

Delivery and Operations - Enhancing Efficiency and Flexibility

Integrating TAG into LLMs not only elevates the capability of AI systems but also introduces significant operational benefits, making the adoption of AI more practical and efficient. The cost implications of adding TAG versus training and retraining a model are notably lower. This cost-effectiveness is crucial for organisations looking to stay at the forefront of AI capabilities without incurring prohibitive expenses [1].

Furthermore, the agility TAG offers - where updating an application or knowledge base is far simpler and quicker than the cumbersome retraining of models - ensures that AI systems can rapidly adapt to new information or changing requirements. This operational ease enhances the scalability and flexibility of AI deployments, providing a competitive edge in dynamic market conditions.

A critical element here is that updates to your system can be done by anyone, as opposed to training and retraining the model(s), which requires individuals qualified in Data Science.

Conclusion

Integrating RAG with Large Language Models (LLMs) marks a significant leap forward in artificial intelligence, offering solutions to longstanding issues such as data bias, hallucinations, and knowledge gaps. By leveraging existing systems and external data, TAG enhances the accuracy and relevance of LLM outputs and introduces a new level of personalisation and security to AI applications. This approach underscores the importance of context and up-to-date information, propelling AI towards more practical, efficient, and user-centric applications. As we navigate the complexities of modern AI challenges, TAG emerges as a pivotal technology that balances innovation with responsibility, ensuring AI systems are both powerful and trustworthy.

And now the call to action!

For organisations looking to stay at the forefront of artificial intelligence and leverage the full potential of their AI systems, the time to act is now! We encourage C-suite executives, security architects, technical leads, and data scientists to:

Explore TAG Integration

OpenAI’s API section in its GPT builder is a great example. LangChain’s Tools are close, though they lack the security elements needed.

Prioritise Security and Compliance

Ensure your TAG implementation adheres to data protection standards and respects user privacy if it doesn’t look at the architecture of your AI System and ask how you can segment and protect the data.

Share Insights

Contribute to the broader discussion on ethical AI use and the role of technologies like TAG in shaping the future of artificial intelligence.

#ContextIsAllYouNeed #BeyondTrainingData

References

-

Gao, Y., et al., Retrieval-augmented generation for large language models: A survey. 2023.

-

Cuconasu, F., et al., The Power of Noise: Redefining Retrieval for RAG Systems. 2024.

-

Chen, J., et al., Benchmarking large language models in retrieval-augmented generation. 2023.

-

Pan, S., et al., Unifying large language models and knowledge graphs: A roadmap. 2024.

-

Liu, J., et al., RETA-LLM: A Retrieval-Augmented Large Language Model Toolkit. 2023.

-

Nasr, M., et al., Scalable Extraction of Training Data from (Production) Language Models. 2023.

Mubshra Qadir helped Matthew write this article.